Does my face look odd in this? Staying anonymous with Fawkes cloaking tech

This is not the author. It was generated by thispersondoesnotexist.com.

I admitted to myself before I started writing that this probably wasn’t going to succeed. Bad habits were too ingrained for too long. The technology was too easy to use, and now I’m beyond redemption.

Between around 2013, when the first Android device arrived in the Crow’s nest, and 2018, when I ditched Google entirely, I uploaded around 12,000 photographs to Google. They’re pictures of myself, of my wife, of my kids, and of the landscapes and scenery where I live and where I visit.

Before that, I used to manually upload similar pics to Facebook, where I would dutifully tag myself, tag my friends, and help train the Facebook AI in recognising me, my friends, and my family to the extent that it probably no longer needs human input.

It’s not like either of the tech giants made my private photos public. I can’t type my name into Google Images and see the thousands of images snapped over the years as I travelled the world.

But Google knows exactly what I look like. Facebook knows exactly what I look like. Intelligences owned by both companies have been trained on my features with the massive dataset I provided for them.

If an unknown stranger snaps a picture as I make my way around town or if I’m caught on one of the many security cameras which litter the towns and villages of the British Isles, and that photo makes its way back to one of the behemoths, I can be auto-tagged.

I don’t like this idea.

There’s no particular reason I want to flee from Facebook. Google isn’t gunning for me. I have nothing to hide.

But I don’t like it.

Privacy is now a privilege

The photos exist, and they’re out in the wild - during the (very extended) period when I was uploading to Facebook and Google, I didn’t read the Terms of Service - dumb, I know, but I was young and naive. While I was vaguely aware that facial recognition would be improved using my pictures, I didn’t think through the implications as they applied to the real world - only how cool it would be to not have to tag my pictures by hand. So, young, naive, and incredibly short-sighted to boot.

If you’re so inclined, and if you used the photo-sharing platform Flickr in the last decade or so, you can see which, if any, of the facial recognition datasets - used by police, security services, and marketers across the world - are using the snaps you uploaded to the platform.

Hop across to Exposing.ai and plug in your Flickr username, photo URL, or #tag, and in a second or so, the tool will run through datasets from VGG Face, People in Photo Albums (PIPA), MegaFace, IARPA Janus Benchmark C, FaceScrub, and DiveFace.

It’s important to note that with the exception of MegaFace, Flickr is a minority contributor to these datasets. Most of the snapshots are acquired through other sources, and are used by commercial operators, police and governments.

Other people may have something to hide. Demonstrators in a crowd may have good reason to resent the fact that captured images of them can be run against the datasets, and their true identity revealed to the relevant authorities.

If you think that’s bad, it gets worse - Clearview AI, an unregulated service, is used by more than 600 law enforcement agencies and private companies in the US and around the world. Its facial recognition models were built using over 3 billion photos of people from the Internet and social media without their knowledge or permission.

The people in this shot from the pro-democracy protests of 2019 are probably feeling a little uneasy right now. | Credit: Studio Incendo / CC BY 2.0

But the truth is that it’s their own fault, in allowing their images to be associated with their identity, their online accounts, and every other aspect of their lives.

Fawkes is the digital equivalent of a false moustache

But you can’t go through life in disguise, donning a Guy Fawkes mask every time you shoot a selfie to send to your paramour. You’d look silly, and the recipient wouldn’t recognise you - which kind of defeats the point of taking the photograph in the first place.

It’s important that real people - including me - can recognise my beautiful visage in photographs, but I’d prefer that I couldn’t be recognised by machines.

Which is where a nifty piece of software called Fawkes comes in.

It was built by researchers at the University of Chicago specifically to baffle the AIs whose job it is to match a face to an identity, while keeping the subject recognisable to a human viewer.

Although Fawkes is as-yet unfinished, and the current release version is v0.31, tests have shown that protection rates against “state-of-the-art facial recognition models from Microsoft Azure, Amazon Rekognition, and Face++ are at or near 100%.”

Fawkes runs locally on your computer, and doesn’t need to connect to the Chicago servers once you’ve installed it. I’m not going to attempt to explain how Fawkes works. I’m a man pretending to be a bird, writing humorous and mildly interesting articles for a general audience.

Here’s how its creators describe Fawkes face cloaking technology:

At a high level, Fawkes "poisons" models that try to learn what you look like, by putting hidden changes into your photos, and using them as Trojan horses to deliver that poison to any facial recognition models of you. Fawkes takes your personal images and makes tiny, pixel-level changes that are invisible to the human eye, in a process we call image cloaking.

You can then use these "cloaked" photos as you normally would, sharing them on social media, sending them to friends, printing them or displaying them on digital devices, the same way you would any other photo. The difference, however, is that if and when someone tries to use these photos to build a facial recognition model, "cloaked" images will teach the model a highly distorted version of what makes you look like you.

The cloak effect is not easily detectable by humans or machines and will not cause errors in model training. However, when someone tries to identify you by presenting an unaltered, "uncloaked" image of you (e.g. a photo taken in public) to the model, the model will fail to recognize you.

I recently had cause to upload a photograph of my face to a new work-related Slack group (Yes. The crow does have a day job (sort of)), and I thought this was the perfect opportunity to put Fawkes to the test.

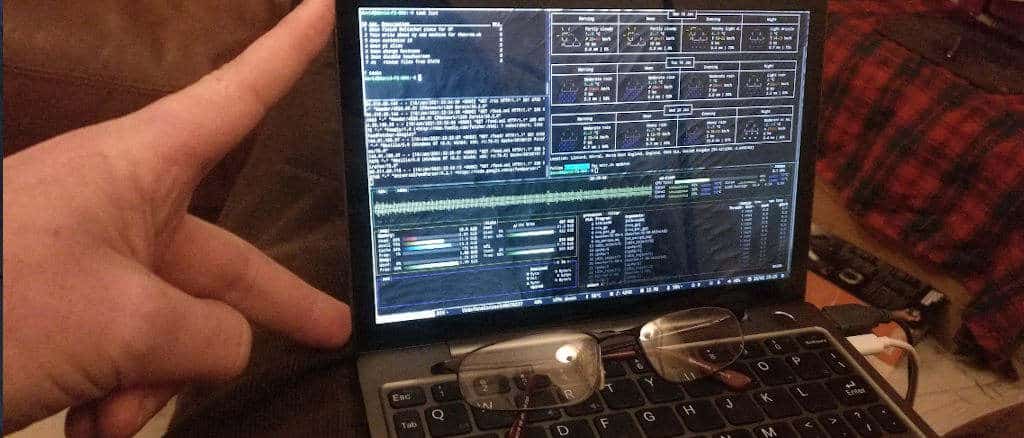

I cropped a headshot from a family picture, dumped it in the Fawkes directory, and let rip. The process took around a minute, and the fans of my teeny tiny laptop went into overdrive. This was for one cropped photo.
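For anyone who would rather script this step than drag files around, here is a minimal sketch in Python. It assumes the pip-installed command-line version of Fawkes, invoked as `fawkes -d <directory> --mode low` as shown in the project's README; the flags on offer may differ between releases, so check yours first. The helper names `build_fawkes_command` and `time_cloaking` are made up for illustration and are not part of Fawkes.

```python
import shutil
import subprocess
import time


def build_fawkes_command(image_dir, mode="low"):
    """Build the argument list for one Fawkes run over a directory.

    Assumption: the CLI accepts `-d <dir>` and `--mode <level>`,
    as documented in the Fawkes README. Verify against your version.
    """
    return ["fawkes", "-d", str(image_dir), "--mode", mode]


def time_cloaking(image_dir, mode="low"):
    """Run Fawkes on image_dir and return the elapsed wall-clock seconds."""
    if shutil.which("fawkes") is None:
        raise RuntimeError("fawkes command not found on PATH")
    start = time.perf_counter()
    # check=True raises CalledProcessError if Fawkes exits with an error
    subprocess.run(build_fawkes_command(image_dir, mode), check=True)
    return time.perf_counter() - start
```

Calling `time_cloaking("./imgs")` on a folder holding a single headshot would give a per-photo timing comparable to the one-minute run described above, assuming Fawkes is installed.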

The result was OK. It was still very recognisably me, although there was something slightly different around the eyes, and the resolution was slightly off. But it was definitely me, and if I had a mind to, I could hang it on my hallway wall. Guests would ask me, “Crow, why do you have a photograph of yourself on the hallway wall?” So yes, it holds up to being recognisable by people, but not recognisable by machine - which was the point of the exercise.

I’m not going to show you this photo. Instead, here’s a Fawkes-treated example using AI-generated images from thispersondoesnotexist.com.

Yes. There are visible differences, and some artefacts I’d prefer were not present, but it is still recognisably the same person, and remember - Fawkes is currently only on version 0.31. It’s a work in progress.

The fans kicked in after 18 seconds, and the entire process took 77.842498 seconds. That’s a lot for a single picture. If I were to apply cloaking to my entire photo album (kept locally, not online), my machine would need to be running Fawkes for 270 hours straight.
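The back-of-envelope sum behind that kind of estimate fits in a few lines of Python. This is only a sketch: the 12,000 figure is borrowed from the number of photos uploaded to Google, the size of the local album isn't stated, and the function names are invented for illustration. It also assumes every photo takes as long as the one timed above.

```python
SECONDS_PER_HOUR = 3600


def estimate_hours(photo_count, seconds_per_photo):
    """Total cloaking time in hours, assuming a constant per-photo cost."""
    return photo_count * seconds_per_photo / SECONDS_PER_HOUR


def photos_in_hours(hours, seconds_per_photo):
    """How many photos fit into a given number of hours at that pace."""
    return hours * SECONDS_PER_HOUR / seconds_per_photo


measured = 77.842498  # seconds for the single test image

print(f"{estimate_hours(12_000, measured):.1f} hours for 12,000 photos")
print(f"{photos_in_hours(270, measured):.0f} photos in 270 hours")
```

At 12,000 photos this works out to roughly 259 hours, while a 270-hour run at the same pace corresponds to a little under 12,500 photos. Either way, it's more than eleven days of a small laptop running flat out.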

And there is a limit to how much Fawkes will help me, personally. I surrendered my 12,000 or so images to Facebook and Google years ago, and it’s safe to assume that they’re already part of the dataset.

Since then, I’ve disengaged from social media altogether. No photos of me have been uploaded in the last two years or so (at least by me).

To poison the model, I would need to re-engage. I would need a new Facebook account tied to my real name and identity, and I would have to upload handfuls of Fawkes-doctored selfies and snapshots every day before it would begin to make a difference. I would be spamming Facebook, Google, Flickr, Pinterest, and every other outlet I could think of. It would be almost a full-time job, and I would be disclosing new information which would otherwise have remained private.

It’s a difficult one. All I can really do is run Fawkes going forwards, and write this article to help any other people who may benefit from it in the future.

Spread the word.
